Proteins are the fundamental building blocks of life and play essential functional roles in biology. We present a multimodal deep learning framework for integrating around one million protein sequences, structures, and functional annotations (MASSA). Through a multitask learning process and five specific pretraining objectives, fine-grained protein domain features are extracted. Through pretraining, the multimodal protein representation achieves state-of-the-art performance in specific downstream tasks, such as protein properties (stability and fluorescence), protein-protein interactions, and protein-ligand interactions, while also yielding competitive results in secondary structure and remote homology tasks.

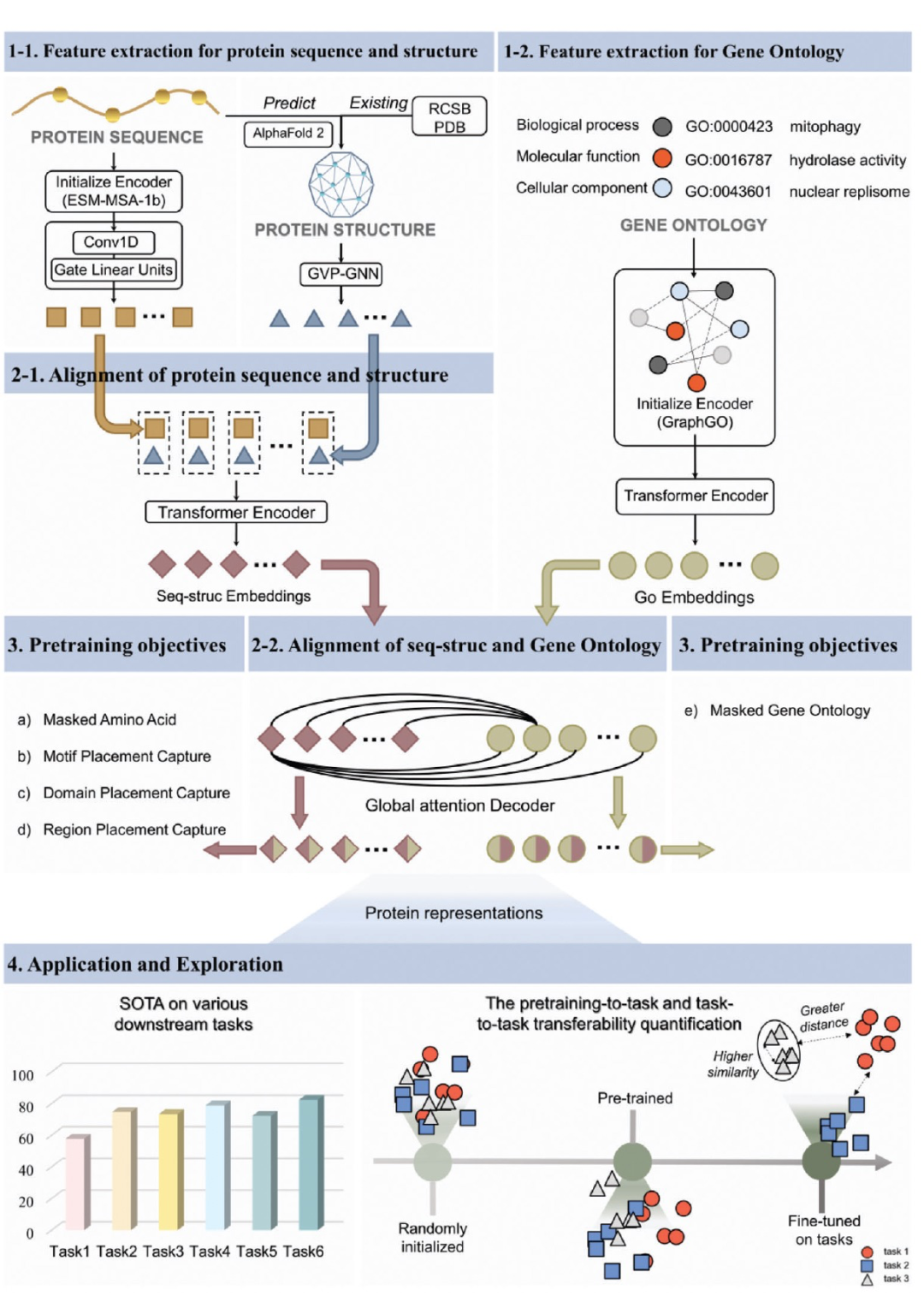

Proteins, as the fundamental building blocks of life, play crucial functional roles in biology. Natural proteins are formed by connecting amino acids through peptide bonds, creating linear sequences that fold into their 3D or tertiary structures to perform their biological functions. Understanding protein characteristics from their sequences, structures, and functions is one of the most significant scientific challenges of the 21st century, as it is crucial for elucidating disease mechanisms and drug development. In recent years, the explosive growth of protein data, such as sequences, structures, and functional annotations, has provided a rich resource for using computational methods, particularly artificial intelligence, to study proteins. Proteins can be regarded as the natural language of biology, composed of multiple amino acid "words," making NLP language models well-suited for protein research. Corresponding protein representations have shown remarkable performance in many protein-related downstream applications, such as predicting protein stability and mutation effects. However, proteins are not solely composed of linear sequences of amino acids, and inferring the entirety of a protein solely from sequence data is challenging. Incorporating protein structure or functional annotations into language models is a relatively recent development. Although such approaches have enhanced model performance and applications, challenges remain. Many unique and fine-grained protein properties, such as functional domain units, have yet to be fully incorporated into pretrained models. Additionally, there is currently no metric to quantify how well a protein representation has been pretrained and its applicability to downstream tasks. Here, we introduce MASSA, a multimodal protein representation framework that integrates domain knowledge of protein sequences, structures, and functional annotations (see Figure 1). The generated protein representation will be used for downstream tasks and quantified through cross-task learning processes. It is noteworthy that the model can accept inputs containing only sequence data for downstream applications. When a protein sample comprises three modalities, they are all treated as input, while for samples lacking modalities, such as structure and gene ontology term information, they are processed as masked tokens.

Data Sources

We collected multiple datasets, including protein sequences from UniProt, protein structures from the RSCB PDB and AlphaFold protein structure databases, Gene Ontology (GO) annotations, and protein domain, motif, and region information from UniProtKB. After preprocessing, the constructed multimodal dataset comprises approximately one million samples of sequences, structures, GO annotations, protein regions, motifs, and domains. The quantities and original formats of these multimodal data are illustrated in Figure 2a.

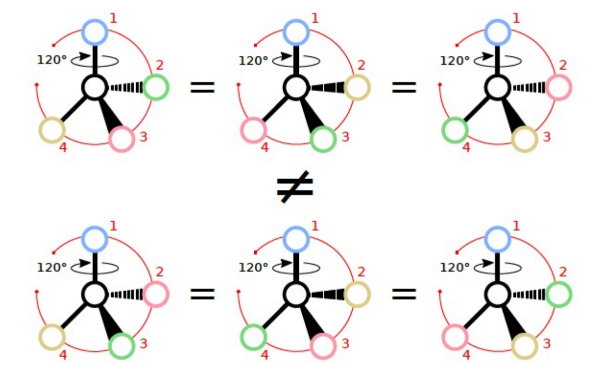

In these data, protein sequences, structures, and GO annotations are used as training inputs, while regions, motifs, and domains are employed as training targets. Specifically, the term "region" refers to biologically relevant segments within a sequence, such as amino acids 346 to 375 of Q8BUZ1, corresponding to its interaction with actin. Motifs are collections of secondary structures forming specific geometric arrangements that perform distinct protein functions. Due to their short length and high sequence variability, most motifs cannot be accurately predicted through computational methods. In contrast, domains are independent three-dimensional structural folding units that often function autonomously from other components of the protein.

In Figure 2b, the sequence set "SSLSA" within the illustrated protein sample represents motif 1. Each amino acid in this section has its own position, knowledge, and category. To embed this information, we adopted an approach similar to named entity recognition strategies in natural language processing. Specifically, we treated each category as a named entity and subdivided each entity into individual amino acid combinations, which were then classified. This approach follows the BIOES tagging scheme. For instance, motif 1 can be divided into the set {S, S, L, S, A}, and the corresponding category set is {B−Motif1, I−Motif1, I−Motif1, I−Motif1, E−Motif1}. B−Motif1, I−Motif1, and E−Motif1 represent the "beginning," "interior," and "end" components of motif 1, respectively. Additionally, non-entity amino acids (marked in black) are labeled as "O" (outside entity). Entities with only a single label are marked as "S" (single), such as S−Motif3.

Following the named entity preprocessing, we analyzed the distribution of categories. There are 1364 motif categories, 3383 domain categories, and 10628 region categories. As shown in Figure 2c, all of these distributions exhibit a long-tail pattern, often leading to sensitivity in model training. Therefore, we merged smaller subcategories within each long-tail distribution into a single "other" category. Ultimately, we obtained 130 motif categories, 712 domain categories, and 222 region categories.

model part

We conducted pretraining of the MASSA model using the constructed multimodal dataset. The pretraining process consisted of three steps (as depicted in Figure 1): feature extraction for each modality of sequences, structures, and functional GO annotations. Token-level self-attention was employed to align and fuse sequence and structure embeddings. Subsequently, the obtained sequence-structure embeddings were globally aligned with GO embeddings. The model was pretrained using five protein-specific objectives, including masked amino acid/Gene Ontology prediction and localization of structural domains/motifs/regions.

In the first step, initial sequence and GO embeddings were provided by the protein language model ESM-MSA-1b and our proposed graph convolutional network for GO terms, GraphGO. ESM-MSA-1b is a protein language model pretrained for masked language modeling, a widely used objective in pretraining protein language models, which we also employed in our model. As mentioned earlier, this objective originates from natural language processing and aims to extract semantic information from a large number of amino acid sequences. The input sequences were processed through ESM-MSA-1b to obtain the initial sequence embeddings.

Gene Ontology (GO) describes various protein functions, covering three ontology categories: biological process, molecular function, and cellular component. To obtain initial GO embeddings, we developed and trained a model called GraphGO (as shown in Figure 1). Specifically, we constructed a graph containing 44,733 GO nodes and 150,322 edges. In GraphGO, we utilized three graph convolutional network (GCN) layers and two training objectives, link prediction and node classification, to extract hidden features. Following GraphGO training, the two considered evaluation metrics, AUC for link prediction and accuracy for node classification, reached high levels (close to 0.82), as shown in Figure 2d. Additionally, t-SNE visualization of GO embeddings (Figure 2e) demonstrated excellent clustering results for the three ontology categories after training. These results indicated that GraphGO had learned reliable representations of GO terms.

MASSA was pretrained on the constructed multimodal dataset using a balanced multi-task loss function to accomplish the five protein-specific pretraining objectives. During the pretraining process, the multi-task loss continued to improve after several epochs (Figure 2f), consistent with previous research findings. Pretraining was stopped after 150 epochs, and subsequent evaluation of the model was performed for downstream tasks.

Experimental results

We conducted an analysis of various protein property benchmarks included in TAPE, including secondary structure, remote homology, fluorescence, and stability benchmarks. On these sequence-based datasets, the model's performance was evaluated against other methods in two different ways: with or without pretraining objectives. "Without pretraining objectives" (comprising only steps 1 and 2 from Figure 1) indicates that the model is trained from scratch on downstream tasks, while "with pretraining objectives" (involving steps 1-3 from Figure 1) means that the model is fine-tuned after full pretraining. Both experimental groups utilized only protein sequences as input. The distinction lies in the fact that the "with pretraining objectives" group can benefit from pretrained knowledge.

Different downstream tasks involve different types of labels. For instance, the stability benchmark is a regression task, where input protein X is mapped to a continuous label Y to predict the protein's stability. As shown in Figure 3b, on this task, the model with pretraining achieved a Spearman's R value of 0.812, outperforming the model without pretraining (R=0.742), indicating substantial benefits of pretraining on this task. Compared to other methods, as depicted in Figures 3c and 3d, the model achieved state-of-the-art performance on all tasks in the experiments without pretraining objectives.

Results from ablation experiments (Figure 3e) suggest that initial sequence embeddings from ESM-MSA-1b are crucial for these subsequent tasks, especially for remote homology. Consistent with other research, multiple sequence alignments and masked language modeling are advantageous for protein structure-related tasks, such as secondary structure and remote homology. In contrast, the information obtained from our proposed multimodal fusion and fine-grained pretraining objectives is more beneficial for biophysical-related tasks, such as stability and fluorescence. The gap between results from the ablation group and the full model (Figure 3d) collectively indicates that only by integrating all modules together can optimal performance be achieved.

The model was evaluated on several protein-protein interaction benchmarks, including SHS27k, SHS148k, STRING, and SKEMPI. Among these, STRING, SHS27k, and SHS148k are multi-label classification benchmarks, while SKEMPI is used for regression. More detailed information about these datasets can be found in the experimental section. We selected four methods for comparison, including PIPR, GNN-PPI, ProtBert, and OntoProtein, and evaluated the model on SHS27k, SHS148k, and STRING benchmarks. Our multimodal model outperformed other methods on all these benchmarks. For these PPI benchmarks, the full model received input from all three modalities (sequence, structure, and GO) and ablation studies were conducted to assess the effect of each modality. Five experimental groups represented different modality combinations: 1) random initialization, 2) sequence only, 3) sequence + structure, 4) structure + GO, and 5) sequence + GO. As demonstrated, the performance of groups 3 to 5 was better than group 2, indicating the advantage of combining multiple modalities for all these PPI datasets. Among these results, groups 3 and 5, which involve combining sequence with another modality, achieved relatively better results, confirming the significance of sequence information.

Conclusion

In recent years, AI-based computational methods for learning protein representations have grown in number, and they are crucial for downstream biological applications. Most existing methods typically use only a single data format, such as protein sequences. However, protein knowledge can be accumulated from various types of biological experiments. In this study, we introduced a multimodal protein representation framework for integrating domain knowledge from protein sequences, structures, and functional information. Through carefully designed pretraining processes, we created a versatile protein representation learning tool.